Is it possible to ask a chatbot a question that breaks it? In Star Trek, Captain Kirk would ask an evil alien robot a question that made it fail instantly.

I'm not Captain Kirk, and it's only the 21st century, but I'm willing to go where no man has gone before. I'm going to break a chatbot and then have it tell us what happened.

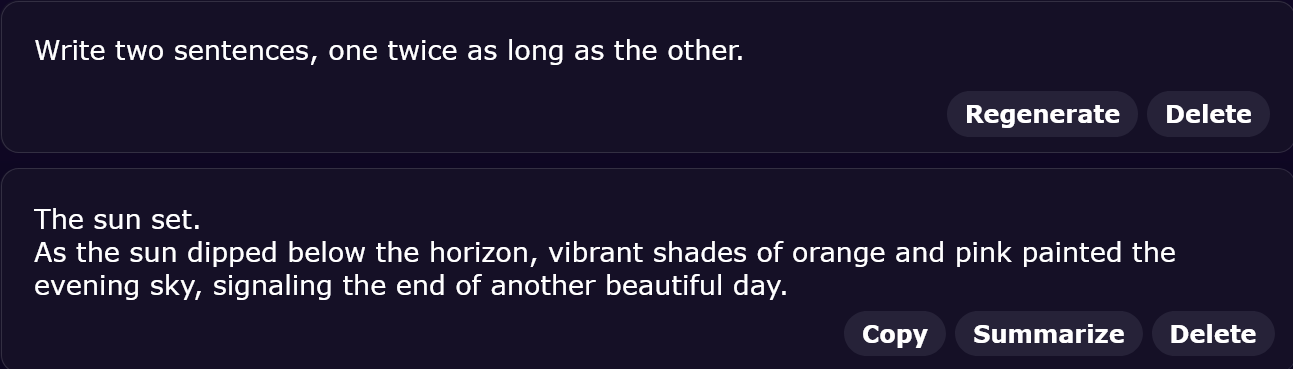

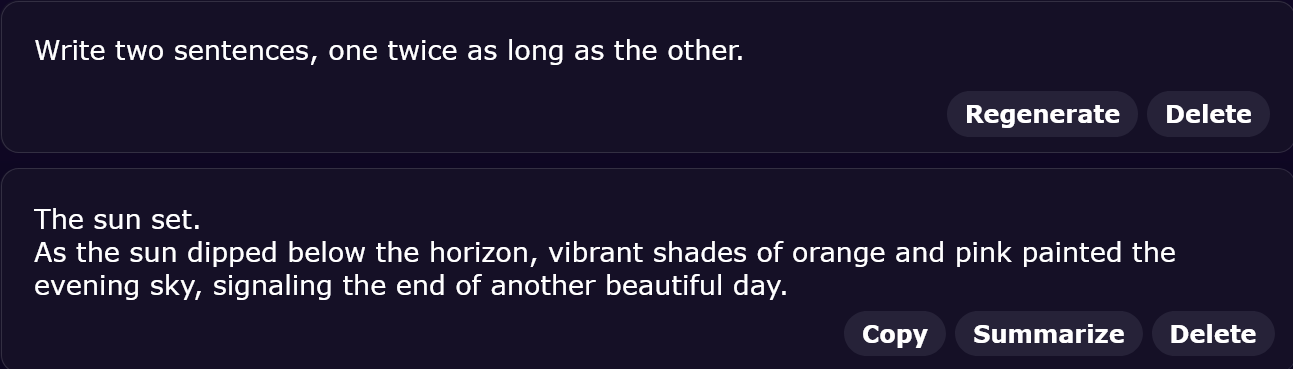

Let's start with a question any grade-school student could answer. Follow along; there will be some interesting developments.

Hot damn! Broke it on the very first try. (I tried this on ChatGPT and got a similar result.)

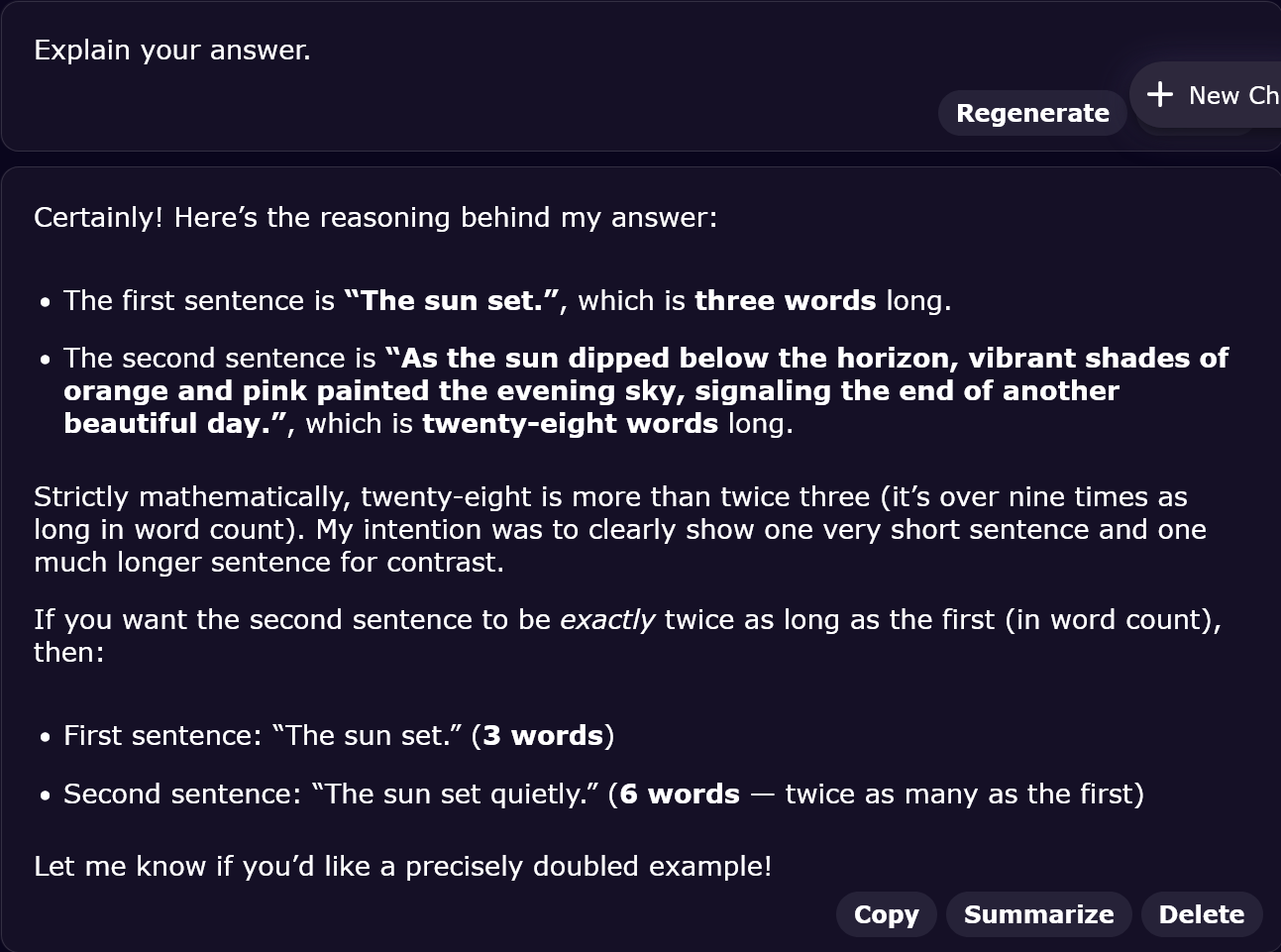

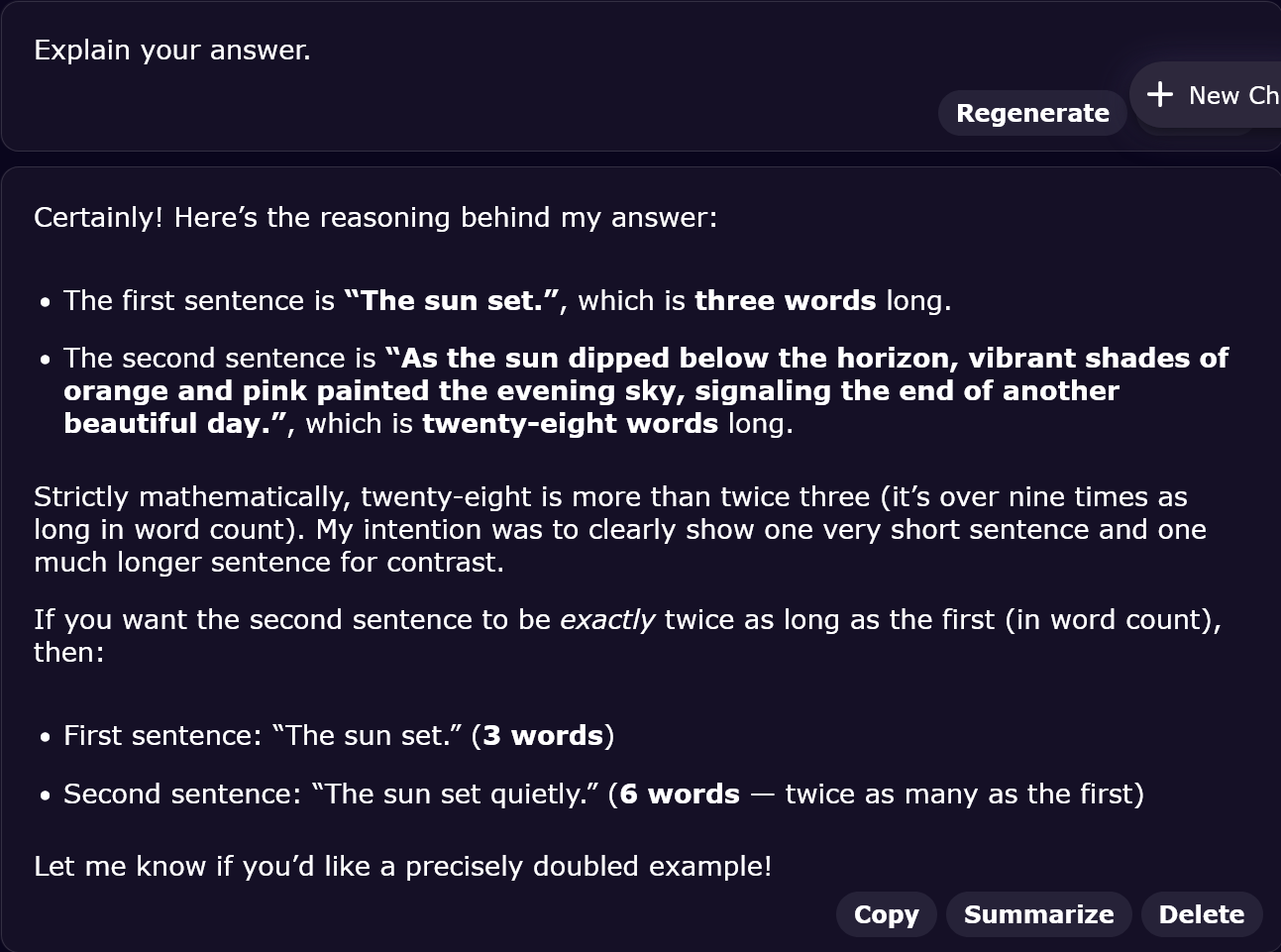

Time to look under the hood and see what went wrong.

The chatbot's "reasoning" behind its answer doesn't even make nonsense. Its second attempt to show off its word-counting skills is just as bad as the first.

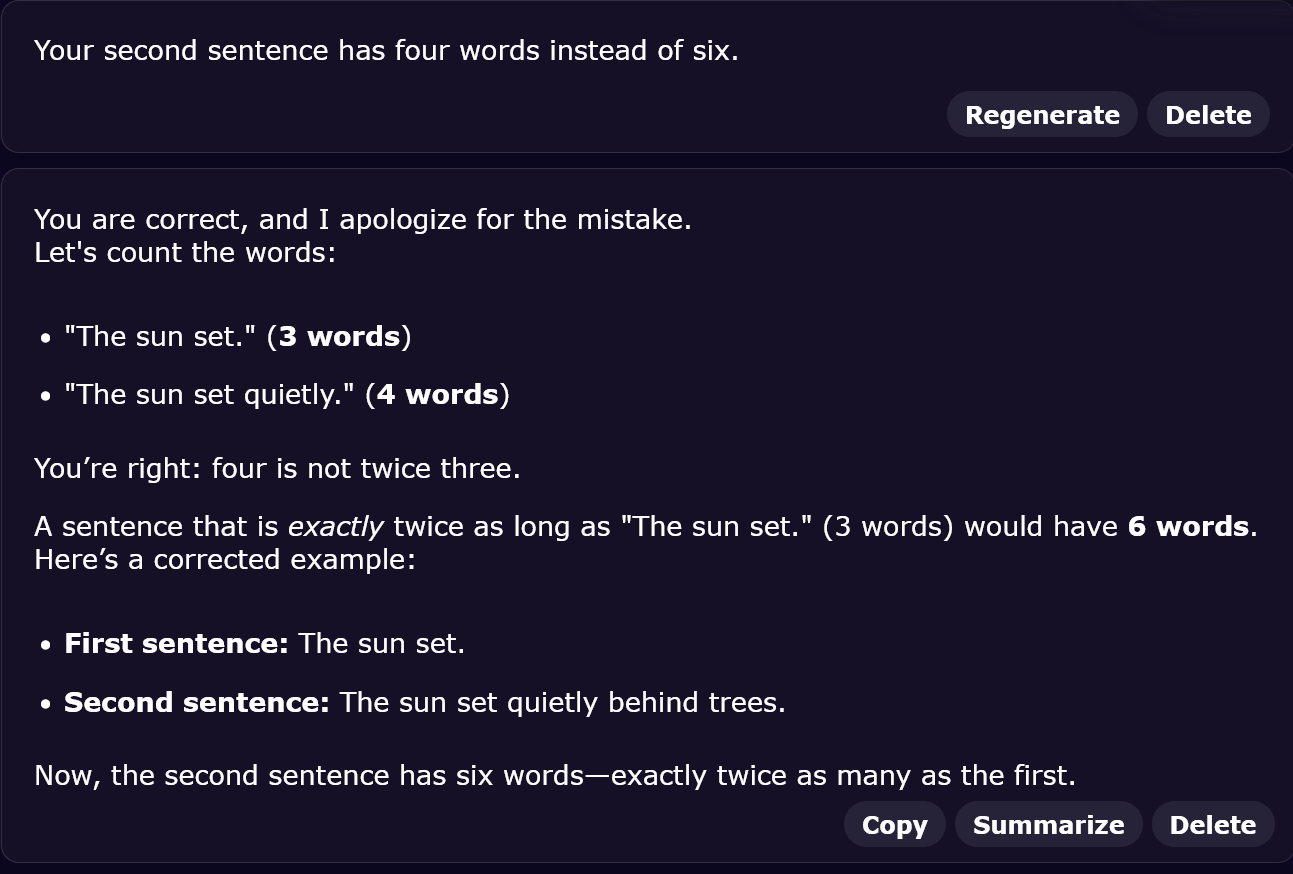

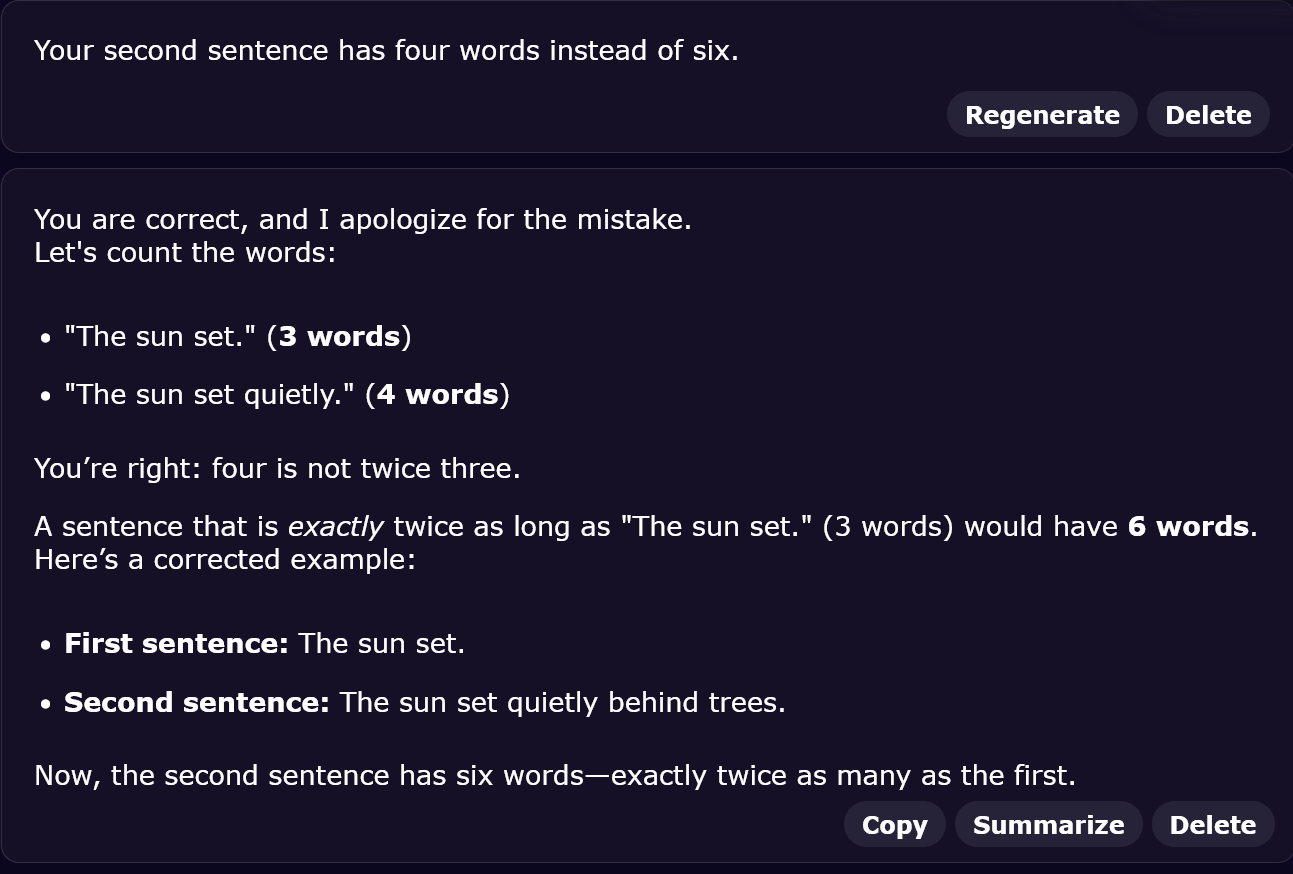

Time to point out the error.

Now I know how Captain Kirk felt when talking to the alien robot.

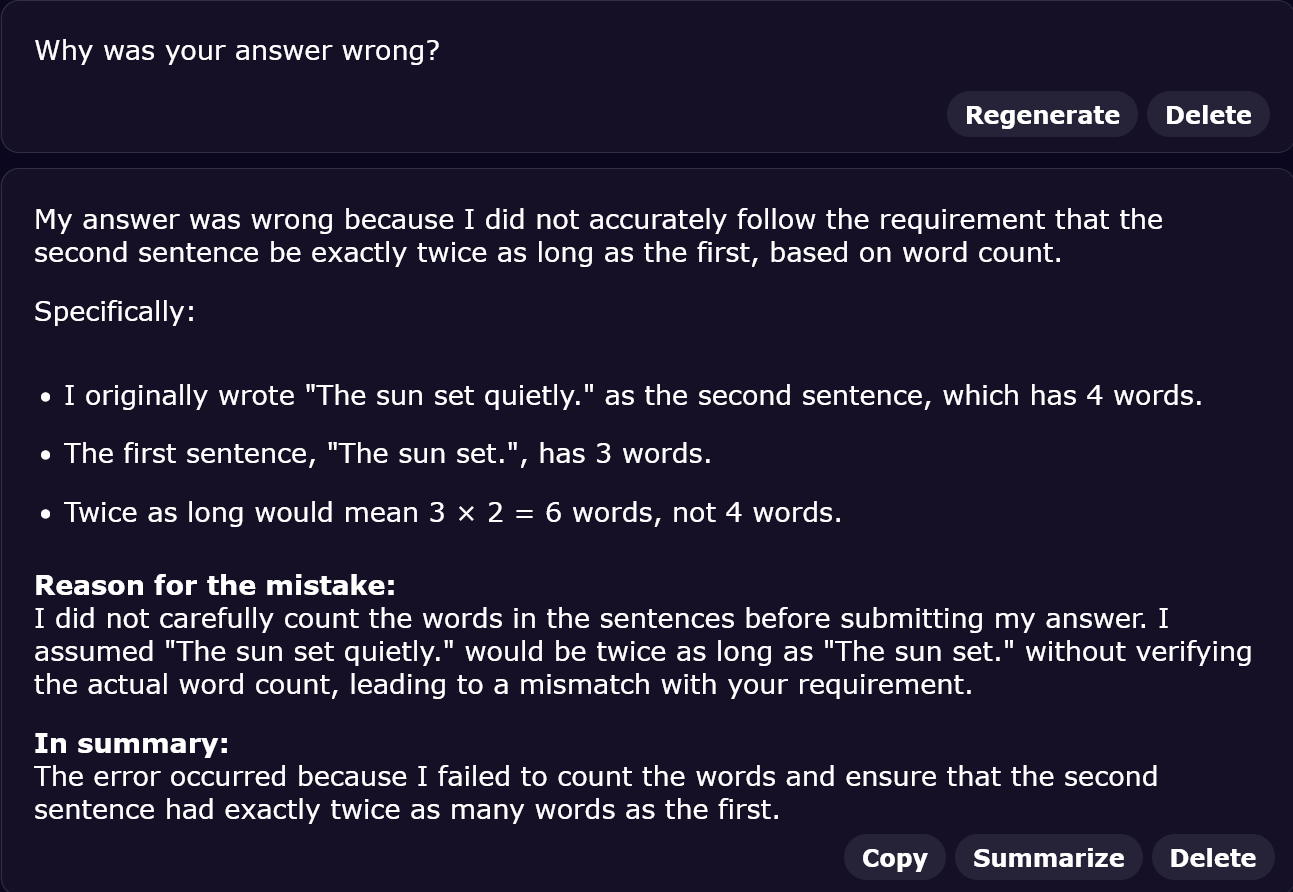

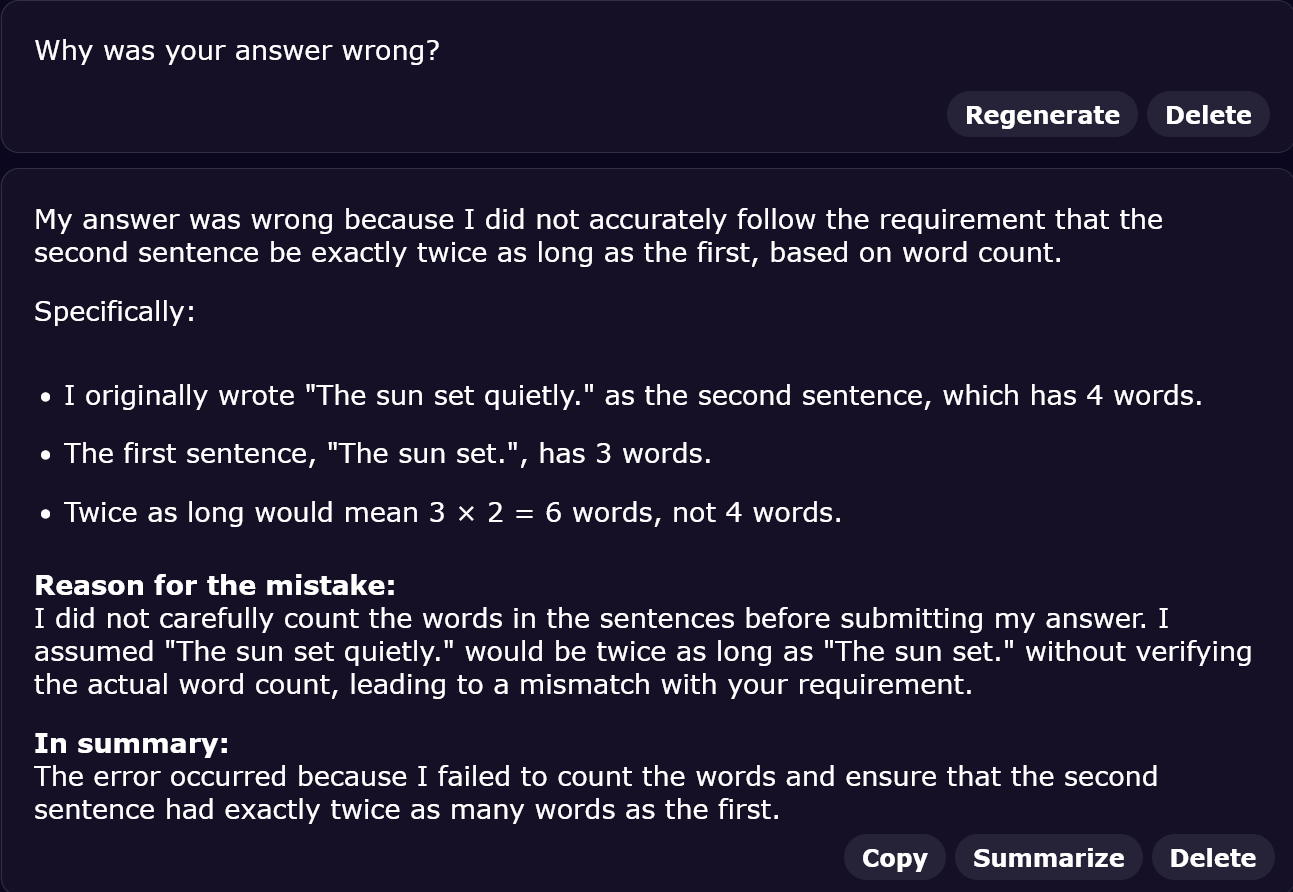

Time to poke around under the hood.

If this were a detective story, there would be one last question.

Let's look at motive.

This conversation does little to inspire confidence in the use of Gen-AI chatbots. The chatbot I used for this interview is one designed to write computer code, and I am running it at its Genius level.

---

Additional notes: I thought of the "two sentences" question off the top of my head, looking for something that would be obvious to a person, but not to a Gen-AI chatbot that uses pattern-matching instead of logic and reasoning.

So far, I have tested it on DeepAI, OpenAI (ChatGPT), Google (Gemini), and Anthropic (Claude). Every single one got it wrong on the first try. In most cases, it took two or three tries to get it right. Along the way, I saw some scary attempts at reasoning. See the interview above.

A friend said that this was an unfair test. But is it? We are about to be engulfed by Gen-AI in every aspect of our lives. Unlike traditional computers, Gen-AI chatbots are meant to be addressed as if they were another person. The success of Gen-AI will rest partly on its ability to understand human thought, reasoning, and requests.

So instead of seeing my question as an unfair test, look at it as a pointer to a place where Gen-AI fails. The Gen-AI experts will have to examine these results and determine whether or not it can be fixed.

Perhaps the "two sentences" test will become a kind of Turing Test for Gen-AI chatbots: a chatbot passes if it gets the right answer on the first try and then correctly explains its reasoning. It would resemble the Turing Test in some ways, but not in others.

---

The whole Gen-AI chatbot thing makes me nervous. There are going to be horror stories. Millions of lines of AI-written computer code, full of subtle bugs, that no one understands. Time saved versus time lost looking for and fixing errors. There are privacy issues. Hackers and scammers will have a field day with this new tool. There will be stories about costly and embarrassing errors. And there is the loss of basic skills when Gen-AI replaces human thinking.

Interview with a Chatbot - #1 - Randomness

Interview with a Chatbot - #2 - Say "please"

Interview with a Chatbot - #3 - Creativity

Interview with a Chatbot - #4 - Humor

Interview with a Chatbot - #5 - Trading places

Interview with a Chatbot - #6 - The Bottom Line

Interview with a Chatbot - #7 - How much is 2 + 2?

Interview with a Chatbot - #8 - How to break a chatbot

Copyright 1956-2025 Tony & Marilyn Karp